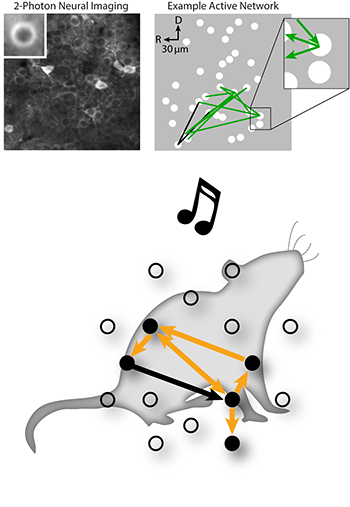

Top: Active neuronal networks during active listening. The left panel shows an example of two-photon imaging of neurons in the primary auditory cortex of mice. The right panel shows an example of a neuronal network that appeared only when a mouse correctly detected a sound.

Bottom: Discovering how we listen. The researchers used two-photon imaging in mice to discover how neurons become networked during active listening for sounds. The figure shows that when the mice correctly detected sounds, neurons formed small clusters with strong links.

Whether waiting for our number to be called at a deli or for a whistle to start a game, our brains must somehow encode our recognition of behaviorally meaningful sounds. In a new study published in the Feb. 2 issue of Neuron, researchers from the University of Maryland have advanced our understanding of what is going on inside the brain when we listen for familiar sounds.

In “Small Networks Encode Decision-Making in Primary Auditory Cortex,” Patrick Kanold, Nikolas Francis, Daniel Winkowski, Alireza Sheikhattar, Kevin Armengol and Behtash Babadi found that the responses of auditory cortex neurons in mice change when the mice become actively engaged in an auditory detection task, and that subsets of neurons process task-related information in different ways.

The researchers first trained mice to detect simple tones, then imaged the activity of large groups of neurons while the mice performed the tone detection task. The researchers found that when a mouse paid attention to sound, neurons in its auditory cortex became more responsive. Using a novel method to analyze neuronal networks, the researchers also noticed that small clusters of four to five neurons in the auditory cortex formed sparsely connected subnetworks when a mouse correctly detected tones. The data suggest that while many neurons were affected by attending to sounds, only a small number of networked neurons were needed to “encode” the mouse’s behavioral choice.

The results show that activity in the auditory cortex is determined not solely by the nature of the sensory signals, but also by internal brain activity related to attention and decision-making. Thus, the auditory cortex encodes not only the acoustic qualities of sound, but also the behavioral meaning of sound and the decisions we make based on what we hear.

This research was supported by the National Institutes of Health BRAIN Initiative and the National Institute on Deafness and Other Communication Disorders.

Patrick Kanold is a Professor in the Department of Biology and an affiliate of the Institute for Systems Research. He is the director of the Kanold Laboratory and part of the university's Brain and Behavior Initiative.

Nikolas Francis is a postdoctoral research associate in the Kanold Lab and the Institute for Systems Research.

Daniel Winkowski is an Assistant Research Scientist in the Department of Biology.

Alireza Sheikhattar earned his Ph.D. in Biology in 2017, advised by Behtash Babadi. He is currently a research intern at the Howard Hughes Medical Institute’s Janelia Research Campus.

Kevin Armengol is a Ph.D. student in the Program in Neuroscience and Cognitive Science. He is advised by Patrick Kanold.

Behtash Babadi is an Assistant Professor in the Department of Electrical and Computer Engineering and an affiliate of the Institute for Systems Research.

February 2, 2018
