Automated Action Selection Policy Synthesis for Unmanned Surface Vehicles Using Virtual Environments

Main Participants: Satyandra K. Gupta, S. Lubard, R. Kavetsky, P. Svec, M. Schwartz, and A. Thakur

Sponsors:This project is sponsored by Office of Naval Research and NSF.

Keywords: Artificial Evolution, Policy Synthesis, Unmanned Surface Vehicles, and Robotics

Motivation

Traditionally, innovation and discovery has been the domain of highly creative individuals who rely on their intuition and insights to develop new knowledge, ideas, concepts, and products. Over the last two decades, the advent of information technology has significantly influenced all facets of the engineering practice. So a natural question is Ė what role can computers play in the innovation and discovery process? Recent advances in the high fidelity simulations enable us to do an accurate analysis of proposed solution. The advent of low-cost, high-performance computing architectures enables us to explore a very large number of solutions in a short period of time. Advances in procedural representations enable us to automatically generate complex candidate solutions. Hence, our premise is that high fidelity simulations can now facilitate innovation and discovery process.

A major issue in the development of increased autonomy for robotic vehicles such as unmanned surface vehicles (USVs) is the time and expense of developing the software necessary to handle a large variety of missions and all the variations in the encountered environments. This is a truly challenging task and requires writing a large amount of lines of code by human programmers.

We have developed a new approach for developing planning software that operates autonomous USVs. This new approach takes advantage of the significant progress that has been made in virtual environments and machine learning. The basic idea behind our approach is as follows. The USV explores the virtual environment by randomly trying different moves. USV moves are simulated in the virtual environment and evaluated based on their ability to make progress toward the mission goal. If a successful action is identified as a part of the random exploration, then this action will be integrated into the policy driving the USV.

We anticipate that there may be portions of the mission, where trial and error alone will not be adequate to discover the right decision rule. In such cases, two additional approaches are utilized to make progress in acquiring the right policy. The first approach involves seeding the system with the policy employed by humans to solve a challenging task. The second approach is to restrict the action space based on some type of feasibility criteria.

We focus specifically on automated synthesis of action selection policy used for blocking the advancement of an intruder boat towards a valuable target under the assumption that the intruder's attacking policy is fully classified and thus can be modeled. The important requirement on the intruder's policy is that it is human-competitive in the sense that its efficiency approaches the efficiency of strategies exhibited by human-driven intruders.

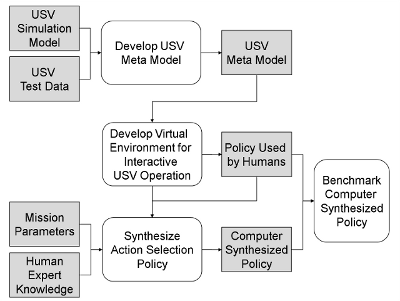

An overview of our overall approach is shown in Figure 1. The first major component of our approach is development of a physics-based meta-model. High fidelity simulation of USV is computing-intensive and cannot be used for discovering decision rules or trees used in planning. We have developed a meta-model by conducting off-line simulations of the USV in the sea. This simulation accounts for wave and USV interactions. The meta-model provides information about turning radius, steady state velocity, and acceleration as a function of rudder angle and throttle position.

We have developed a mission planning system whose main part is an evolutionary module for evolving planning decision trees. We used this system to automatically generate decision trees expressing blocking policy for the USV. This means that instead of automatically generating a program composed of low-level controller actions (steer left/right, go straight), we generate a program represented as a decision tree that consists of high-level controllers as building blocks (go in front of the intruder, etc.) together with conditionals and other program constructs.

We have also developed a virtual environment-based visualization system (see Figure 2) which serves as an emulator of the real USV environment and contains gaming logic which allows human players to play against each other or against the computer. In the game, the player controlling the intruder boat must reach a protected target, while the player controlling the USV must block and delay the intruder as long as possible. The game logic is responsible for the rules of the game, game logging and replay, boat behaviors, and scoring. The game can be played on two computers over a network. In addition to offering basic gaming capabilities, the visualization system provides collision detection and basic physics to the objects in the scene.

Deployment of autonomous USVs in critical missions requires that the performance of the autonomous system matches with that of a remote controlled vehicle. We have used the virtual environment based game to compare the automatically discovered decision trees representing the blocking policy to the strategies used by human players. Our goal was to (1) automatically generate USVís blocking policy against a specific hand-coded human-competitive intruder, and (2) compare its efficiency to the blocking policy exhibited by human operators when pit against the same intruder.

|

|

|

|

|

Action Selection Policy Executor Architecture

The complexity of interactions of a mobile robotic system suggests structured (non-monolithic) high-level controller architecture. The unmanned boats must behave based on the effect of several independent threads of reasoning. This is due to the highly parallel nature of events and processes in the real world. The high-level controller architecture can meet this requirement if it is modular, and when the modules can act simultaneously in a coordinated cooperation.

The navigation system resides inside the USV and consists of perceptual, reasoning / planning, localization, and behavioral components. The USV's action selection policy executor itself is reactive which means that it interprets and executes policy in a strict timely fashion. The final product of the execution is a sequence of action commands taking over the controller for a certain period of time.

The reactive policy is a stored human-readable structure producing different motor actions in different situations. Its representation is a decision tree consisting of different high-level action controllers, conditional rules, standard boolean values and operators, conditional variables, program blocks, and velocity commands. The structure of a high-level controller is based on the behavior-based subsumption architecture. This architecture decomposes a complicated high-level intelligent behavior (a high-level controller in our case) into a set of more solvable and simple behaviors (steer left / right, go straight, arrive) organized into layers. These primitive behaviors are finite state machines acting in response to sensor inputs and producing motor action outputs. The high-level controllers, which are the main building blocks of a policy, can be parameterized. The parameters of a high-level controller define its underlying property. For instance, for the go-intruder-front controller, one of the parameters defines the USV's relative goal position (in polar coordinates) in front of an intruder. This effectively allows the USV to cover all feasible positions, as defined by its policy around the intruder.

The inputs into the action selection policy executor are sensor data, description of mission parameters, and the USV meta model itself. The reactive obstacle avoidance behavior which is a basic part of all high-level controllers utilizes a virtual sensor field divided into four sensor cone areas (front left, front right, left, right). In addition to this virtual short-range sensor, the USV has a radar sensor on-board using which it can compute the state of the intruder boat (distance, direction, velocity, etc.). The state of the intruder boat is then directly used by conditionals inside the USV policy when the policy is executed.

The outputs are translation and steering velocities for a low-level USV controller that are directly translated into motor commands for device drivers of a particular actuator. At any particular moment, the policy executor can be in only one state. The policy interpreter takes the currently used policy as an input and produces action outputs. Based on the current sensor data readings and the fact that the executer finished performing previously activated high-level controller, only one new high-level controller inside the policy can be activated. This high-level controller consists of multiple primitive behaviors and decides which one is used to produce the ultimate action output.

Development of Physics-based Meta Model

We used potential flow theory based technique for simulating the flow of ocean wave. The potential flow theory assumes the flow to be irrotational, incompressible, and inviscid. There are two boundary conditions on the partial differential equation, namely, (1) Kinematic boundary condition stating that the fluid on free surface remains always on the free surface; (2) Dynamic boundary condition stating that the pressure on the free surface is same as the atmospheric pressure. The partial differential equation is solved to obtain the velocity potential and the ocean wave surface equation. The velocity potential is used along with Bernoulliís law to obtain the dynamic pressure head at any arbitrary point and any given time in the ocean. In order to consider the effect of USV motion on the ocean wave flow, we used added mass model. In practice the added mass and the damping matrix are determined by performing parameter identification from the experimental data. Using the described model we implemented a dynamics simulator for the USV.

Unlike estimating the restoring force vector and wave force vector using an approximated simplified formula, we computed the instantaneous forces and moments by actually intersecting the USV geometry with the wave surface at the given location and time of the USV resulting into a more accurate estimation of restoring force and moments. To compute the environment force we only considered the wave forces and ignored the effects of wind. We discovered however that the simulation technique described is computationally expensive because at every time step it performs the geometric computation of the wet area of USV and the surface integration over the wet area to compute the force and torques. We developed automatic model simplification algorithms based on spatial clustering, temporal coherence of forces, and parallelization to reduce the computation time in the simulation. The simplification approach made the simulation to run in real time.

Automated Synthesis of Decision Tree for Blocking

|

|

USV Protects Area Containing Oil Tanker |

Our solution to the problem of reactive planning was the use of a computer simulated evolution based on the Darwinian principles of survival and reproduction of the fittest. Using this phenotype evolutionary process, we were able to evolve the actual decision tree representing an action selection policy. The specific evolutionary method we used is the strongly-typed generational genetic programming (GP). This is a robust stochastic optimization method that searches a large space of candidate hypotheses (programs) while looking for the one with the best performance (fitness value). During the evolution itself, a population of USV decision trees is being stochastically transformed into a new population with a better average fitness value using standard evolutionary operators like crossover and mutation.

We were particularly interested in automatically discovering a blocking policy for slowing down the movement of an intruder toward the protected object. We assumed that the intruder boat utilizes a specific policy which can be classified. This specific policy is assumed to be human-competitive meaning that its performance is approaching the performance of human driven intruders.

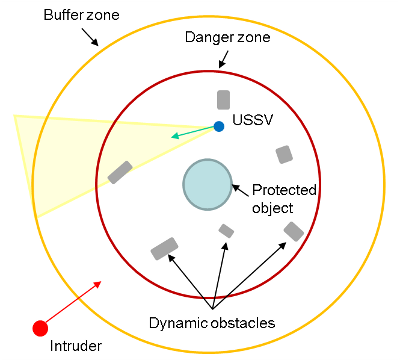

The USVís policy for blocking is defined in the context of a simple mission. During this mission, the USV must protect an oil tanker by patrolling around it while avoiding collisions with friendly boats and scanning the environment for a possible intruder. The environment around the oil tanker is divided into danger and buffer zones (see Figure 3). Once the intruder enters the buffer zone, the USV approaches the intruder boat and circles it for surveillance purposes. If the intruder enters the danger zone, the USV does its best to block the intruder, slowing the intruderís progress toward the protected object.

During the evolutionary process, a newly created USV individual is evaluated against a particular human-competitive intruder in four different test scenarios. In each scenario, the intruder has a different initial orientation and distance from the target, and the USV always starts from an initial position closer to the target. The evaluation lasts for a maximum amount of time steps which equals to five minutes in real time. The maximum speed of the USV is set to be 10% higher than the speed of the intruder.

Visualization System

As part of this work we have developed a virtual environment-based visualization system. The purpose of the visualization system is twofold: to evaluate the automatically generated blocking policies represented as a decision tree and to serve as an emulator of the real environment in which the USV will operate.

A virtual environment can be a useful tool for prototyping of USV action selection policies and the navigation system. Use of a virtual environment, especially in the early stages of a project, can speed up the development of the initial navigation system because it allows one to avoid performing a lot of testing of the generated policies in the real world with real boats and numerous real world problems. In the early stages, a lot of progress can be made by simulating the real world with a virtual environment and using the virtual environment to simulate much of the data and sensors needed by the navigation system to generate effective mission policy. Later on, real sensors, real boats, and real water can be substituted for the virtual environment to provide a more realistic scenario for the navigation system. As long as the interfaces are clearly defined, it will not matter to the navigation system whether the data is coming from the virtual environment or from the real world. For these reasons, the virtual environment is used in this project as an emulator of the real environment.

The other function of the visualization system is to allow human versus computer testing. The visualization system is capable of running a game in which human players are pitted against the computer driven virtual boats. This allows some rough verification of the generated mission policies. Human players can take control of an intruder boat and try to reach a protected target while a USV, driven by computer generated policy, can carry out blocking policies to try to prevent the human players from reaching their goal. Various measurements can then be made (e.g. time, distance) to assess the effectiveness of the generated blocking policies.

The visualization system offers a realistic 3D immersive world by implementing physics based scene, incorporating rigid body dynamics, waves, and dynamic obstacles. The virtual environment also handles user input. User input consists of keyboard strokes, mouse clicks and movements, or a Microsoft XBox controller interface. The XBox controller offers a very intuitive and familiar user interface, especially for the experienced gamers. It is important to make the user interface as intuitive and as simple as possible so that the performance of the human operators, when compared to the performance of the computer reactive policy, is not biased by a poor user interface. The XBox interface allows the human player to not only control a boat, but also control the translation and rotation of the view and switch between different vantage points.

All the boats in the environment are represented with realistic 3D models created using a CAD tool. The ocean is rendered using a dynamically generated triangulated mesh that is linked to a height map. The same height map is used to calculate bobbing motion for all the boats in the environment. Up to a certain distance from the view point, the triangles which make up the ocean surface are constantly updated and redrawn based on the height map queries. The dynamic ocean mesh was broken up into a grid of tiles, where each tile represents an independent object. As the userís view moves and rotates, the virtual environment uses an efficient method to check which tiles are in view and which tiles are no longer within the view angle. Tiles which are not within the view angle are made invisible to prevent the system from rendering unnecessary triangles. The dynamic ocean implementation also supports multiple levels of detail (LOD). Ocean tiles with high LOD level have more triangles than those with a low LOD level. The detail level increases for the tiles that are closer to the view and decreases for those that are far from it. When the system signals an ocean tile to change its LOD level, the triangulated mesh for a specific tile is regenerated.

The game policy is responsible for the rules of the game, game logging and replay, boat behaviors, and scoring. Players must navigate the boats around various obstacles to perform their respective missions. Once models of all the boats are loaded into the scene, a physics-based dynamics model is used to govern the boat behavior. In human versus human mode the game is played on two computers over a network using User Datagram Protocol (UDP) and a client-server architecture. The game also supports the concept of a limited visual range. Each player is only able to see objects which are within a certain configurable radius of the boat they are driving.

The visualization system supports collision detection for all objects in the environment so that objects are not allowed to pass through each other. Some basic boat physics has been implemented to deal with boat collisions. When two boats collide, they deflect each other depending on the collision point for each boat, each boatís velocity, mass, and each boatís direction of travel. The deflection involves both translation and rotation. Upon collision, each boat receives a certain amount of damage. Too much damage results in the sinking of the boat. The computer driven boat is equipped with visibility sensors used for avoiding obstacles.

The visualization system also supports logging so that it can be played back from different perspectives for visual analysis and inspection. The logging system records the movements of all objects during a game.

The boats serving as obstacles have randomly generated structured motion. During the initialization of the environment, a randomly generated sequence of actions, as well as their duration, is built for each boat.

Summary

We have developed a system for automatically generating reactive action selection policies for USVs. A policy is represented as a decision tree which consists of high-level controllers as building blocks, conditionals and other program constructs. We used strongly-typed GP-based evolutionary framework for automatic synthesis of policy for blocking the advancement of a computer-driven intruder boat toward a valuable target. We assumed that the intruder boat utilizes a specific human-competitive policy, which can be classified and thus modeled.

Manual hand-coding of USV action selection policies, which are able to face even a very specific hand-coded human-competitive intruder, is a very challenging task. The usage of evolutionary systems for generating the policy automatically by only providing a set of functional primitives and mission goals holds a great promise. Nevertheless, this must be supported by extensive involvement of human expert knowledge in the process to decide not only what functional policy primitives to use but also how to decompose the problem, especially when generating policy for more complex tasks.

We have built a physical infrastructure for testing radio controlled boats and started evaluating the generated policy in a real environment. Testing in a real environment adds some additional challenges due to the differences with the virtual environment (e. g. inaccurate sensors). We are continuously improving the virtual environment based boat simulations (high and low-level controllers, environment dynamics, sensors, etc.) inside the mission planning system in order to be able to generate a policy reflecting the real world dynamics.

Related Publications

The following papers provide more details on the above-described results.

- P. Svec, S.K. Gupta. Automated planning logic synthesis for autonomous unmanned vehicles in competitive environments with deceptive adversaries. New Horizons in Evolutionary Robotics: post-proceedings of the 2009 EvoDeRob workshop, Studies in Computational Intelligence, Springer (Accepted for publication).

- M. Schwartz, P. Svec, A. Thakur, and S.K. Gupta. Simulation based synthesis of planning logic for autonomous unmanned sea surface vehicles. Simulation Driven Innovation and Discovery, Energetics Applications (Accepted for publication).

- P. Svec, A. Thakur, D.K. Anand, S.K. Gupta, and M. Schwartz. A

simulation based framework for discovering planning logic for

autonomous unmanned surface vehicles. ASME

Engineering Systems Design and Analysis Conference, Istanbul,

Turkey, July, 2010.

- A. Thakur and S.K. Gupta. A computational framework for real-time

unmanned sea surface vehicle motion simulation. ASME Computers in Engineering Conference,

Montreal, Canada, August 2010.

- P. Svec and S.K. Gupta. Competitive Co-evolution of high-level

blocking controllers for unmanned surface vehicles. Exploring New Horizons in Evolutionary

Design of Robots Workshop, International Conference on Intelligent

Robots and Systems (IROS '09), October 2009.

- M. Schwartz, P. Svec, A. Thakur, and S.K. Gupta. Evaluation of

automatically generated reactive planning logic for unmanned surface

vehicles. Performance Metrics for

Intelligent Systems Workshop, Gaithersburg, MD, September 2009.

- A. Thakur and S.K. Gupta. Context dependent contact preserving

off-line model simplification for interactive rigid body dynamics

simulations. ASME Computers and

Information in Engineering Conference, August 30-September 2,

2009, San Diego.

Contact

For additional information please contact:

Dr. Satyandra K. Gupta

Department of Mechanical Engineering and Institute for Systems Research

University of Maryland

College Park, MD 20742

Office: 2147 AV Williams Building

Phone: 301-405-5306

FAX: 301-314-9477

![]()

WWW: http://terpconnect.umd.edu/~skgupta