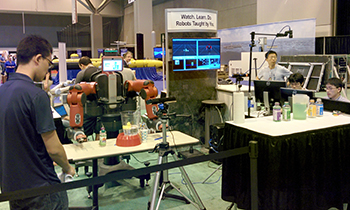

ARC Lab students with the Baxter robot salad-making demonstration at the DARPA Forum in St. Louis.

UMIACS Associate Research Scientist Cornelia Fermüller is the principal investigator, and Professor John Baras (ECE/ISR) and ISR-affiliated Professor Yiannis Aloimonos (CS/UMIACS) are the co-PIs, on a three-year, $800K NSF Cyber-Physical Systems grant, "MONA LISA - Monitoring and Assisting with Actions." The research will be conducted in the Autonomy Robotics Cognition (ARC) Laboratory.

Cyber-physical systems of the near future will collaborate with humans. Such cognitive systems will need to understand what humans are doing, interpret human actions in real time and predict humans' immediate intentions in complex, noisy and cluttered environments.

This research will develop a new three-layer architecture, motivated by biological perception and control, for cognitive cyber-physical systems that can understand complex human activities, focusing specifically on manipulation activities.

At the bottom layer are vision processes that detect, recognize and track humans, their body parts, objects, tools and object geometry. The middle layer contains symbolic models of human activity. Using a grammatical description, it assembles the recognized signal components from the bottom layer into a representation of the ongoing activity. Cognitive control is at the top layer, deciding which parts of the scene will be processed next and which algorithms will be applied where. It modulates the vision processes by gathering additional knowledge when needed, and directs attention by steering the sensors of the active vision system toward specific places.

Thus, the bottom layer is perception, the middle layer is cognition, and the top layer is control. All layers have access to a knowledge base, built in offline processes, which contains semantics about actions.
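
As a rough illustration of how the three layers and the shared knowledge base could fit together, here is a minimal Python sketch. It is not the project's implementation: every name in it (`Detection`, `KNOWLEDGE_BASE`, `perceive`, `interpret`, `control`) is hypothetical, the vision layer is replaced by a list of pre-labeled detections, and the grammatical description is replaced by a simple lookup on sets of labels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str   # what was recognized, e.g. "hand" or "screwdriver"
    region: str  # coarse scene region where it was seen (assumed granularity)


# Hypothetical knowledge base, built offline: maps a set of labels to an action.
KNOWLEDGE_BASE = {
    frozenset({"hand", "screwdriver", "screw"}): "tighten_screw",
    frozenset({"hand", "bracket"}): "pick_bracket",
}


def perceive(scene, focus=None):
    """Bottom layer (perception): return detections, optionally in one region only."""
    return [d for d in scene if focus is None or d.region == focus]


def interpret(detections):
    """Middle layer (cognition): map the detections to a symbolic action, or None."""
    labels = frozenset(d.label for d in detections)
    return KNOWLEDGE_BASE.get(labels)


def control(scene, regions):
    """Top layer (control): look at the whole scene, then attend region by region."""
    action = interpret(perceive(scene))
    if action is not None:
        return action, None
    for region in regions:
        action = interpret(perceive(scene, focus=region))
        if action is not None:
            return action, region
    return None, None


scene = [
    Detection("hand", "bench"),
    Detection("screwdriver", "bench"),
    Detection("screw", "bench"),
    Detection("cup", "shelf"),  # clutter that prevents a whole-scene match
]
print(control(scene, ["shelf", "bench"]))
```

In this toy scene the clutter on the shelf keeps the whole-scene interpretation from matching anything, so the control layer narrows attention to one region at a time until the middle layer recognizes an action.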

The feasibility of the approach will be demonstrated by developing a smart manufacturing system, called MONA LISA, which assists humans in assembly tasks. This system will monitor humans as they perform assembly tasks, recognize assembly actions, and determine whether they are correct. It will alert the human to possible errors and suggest ways to proceed.
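
The monitoring behavior described above can be sketched in a few lines of Python. This is only an illustrative assumption about how such a check might look, not the MONA LISA system itself: the function name `check_assembly`, the action names, and the requirement that steps follow one fixed order are all hypothetical.

```python
def check_assembly(expected, observed):
    """Compare recognized actions with an expected step list and return feedback.

    Assumes a single fixed step order, which a real assembly task may not have.
    """
    for i, action in enumerate(observed):
        if i >= len(expected):
            return f"unexpected extra step '{action}'"
        if action != expected[i]:
            return f"step {i + 1}: saw '{action}', expected '{expected[i]}'"
    if len(observed) < len(expected):
        # No error so far: suggest how to proceed.
        return f"ok so far; next step: '{expected[len(observed)]}'"
    return "assembly complete"


plan = ["pick_bracket", "place_bracket", "tighten_screw"]
print(check_assembly(plan, ["pick_bracket"]))
print(check_assembly(plan, ["pick_bracket", "tighten_screw"]))
```

The first call reports that the work is correct so far and names the next step. The second flags the out-of-order action at step 2.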

The system will have advanced visual sensing and perception; action understanding grounded in robotics and human studies; semantic and procedural-like memory and reasoning; and a control module linking high-level reasoning and low-level perception for real-time, reactive and proactive engagement with the human assembler.

The research will bring new tools and methodology to sensor networks and robotics, with applications across many sectors, including smart manufacturing. The ability to analyze human behavior using vision sensors will affect fields ranging from healthcare and advanced driver assistance to human-robot collaboration.

September 16, 2015