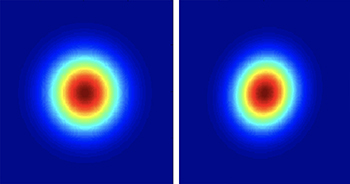

Receptive fields of neurons before and after exposure to stimulus.


A new paper by Professor Shihab Shamma (ECE/ISR), Kai Lu, Wanyi Liu, Kelsey Dutta and Jonathan Fritz has been published in the Journal of Neuroscience.

Natural sounds such as vocalizations often have co-varying attributes, so that one acoustic feature can be predicted from another. This results in redundancy in neural coding. Scientists have proposed that sensory systems can detect this covariation and adapt to reduce the redundancy, producing a more efficient neural code.

This new research, “Adaptive efficient coding of correlated acoustic properties,” explores the neural basis of how the brain’s auditory system adapts to encode two co-varying dimensions efficiently as a single dimension, at the cost of lost sensitivity to the orthogonal dimension.

The researchers’ neurophysiological results are consistent with the Efficient Coding Hypothesis proposed by Barlow in 1961. Barlow posited that sensory systems encode natural stimuli efficiently, minimizing the number of spikes needed to transmit a sensory signal. The new study adds to previous research exploring the brain areas and neural mechanisms underlying implicit statistical learning. The results may deepen understanding of how the auditory system represents acoustic regularities and covariance.


September 17, 2019
