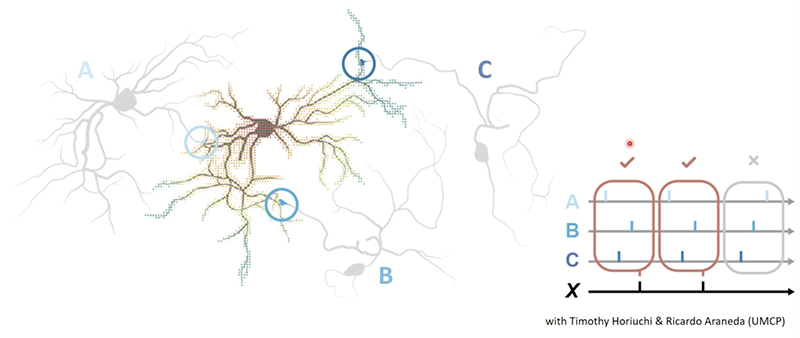

Programming a single neuron to respond selectively to one pattern out of many. Source: Pamela Abshire Distinguished Scholar-Teacher Lecture, Oct. 29, 2021. Image courtesy P. Abshire, T. Horiuchi, R. Araneda.


Biological systems such as brains do many of the same things computers do. They engage in goal-oriented activity. They find, gather, process, structure and manage information. They solve problems. Networks of neurons in the brain are remarkably fast and efficient at pattern recognition and classification; in fact, they handle challenges like these far better than the most sophisticated computers.

One reason scientists want to better understand how the brain works is to develop better algorithms and engineering models that increase the speed and abilities of computers. So far, however, researchers have made only limited progress in understanding what underlies the brain’s remarkable capabilities. For example, they do not yet understand the physical mechanisms at work inside a living neuron and among its networked neighbors.

Now $2,949,109 in National Science Foundation funding will help University of Maryland researchers explore the “rules of life” of neuronal networks.

Professor Pamela Abshire (ECE/ISR) is the principal investigator for “Learning the Rules of Neuronal Learning,” a five-year grant in NSF’s Emerging Frontiers “Understanding the Rules of Learning” program. ISR-affiliated Associate Professor Timothy Horiuchi (ECE) and Professor Ricardo Araneda (Biology) are the co-PIs. The project will begin Jan. 1, 2022.

The researchers will bring together recent technological advances in patterning, electrical recording, optical stimulation, and genetic manipulation of neurons to study how to nurture a healthy culture of neurons while continuously observing and stimulating them at fine scale. They hope to uncover how the individual parts of a single neuron contribute to the overall learning and computation of the neural network.

Earlier attempts in this direction were hampered by technical limitations that forced researchers to work at too coarse a scale. “The scale of the interface was not fine enough,” Abshire says. “They didn’t have the detailed ability to measure and stimulate specific locations within the neuronal network. Now with new tools we can do this.”

“We want to program a single neuron to respond selectively to one pattern out of many,” Abshire says. “We will use the training mechanisms that are already inherent in neurons to program a pattern to which we want the neurons to respond.”

While understanding how sophisticated networks of neurons work is a future goal, working with simple neurons and sparse networks must come first, Abshire says. “When the effort is scaled up beyond a few neurons, the interactions become very complicated.”

The goal is a proof-of-concept demonstration of a programmable computation by neurons in an engineered microenvironment.

The research should have significant implications for the scientific understanding of natural neuronal computation, and it should introduce a completely new set of engineering tools for interacting with living neurons and exploring what is computationally possible. Ultimately, new understanding of the neuronal rules of life will affect work in artificial intelligence, robotics, and neural prosthetics.

Learn more: the subject of the new NSF grant is the focus of Pamela Abshire’s Distinguished Scholar-Teacher lecture, “What can cells teach us about computing?”

November 4, 2021
